DeepSeek R1, a generative artificial intelligence model developed by the Chinese company DeepSeek and funded by the hedge fund High-Flyer, has taken the world by storm. Since its release on January 20, the DeepSeek app has climbed to the top of the US Apple App Store as the most downloaded app in the country, a testament to the public’s excitement about the product’s capabilities.

Source: Apple App Store

According to benchmark results reported in a paper published by DeepSeek, R1 performs on par with, or even surpasses, OpenAI’s o1 model on tasks such as math, coding, and scientific reasoning. It achieves this through three innovative methods:

Chain of Thought Prompts: The model is prompted to outline its reasoning step by step before answering, allowing it to catch its own mistakes and refine its answers.

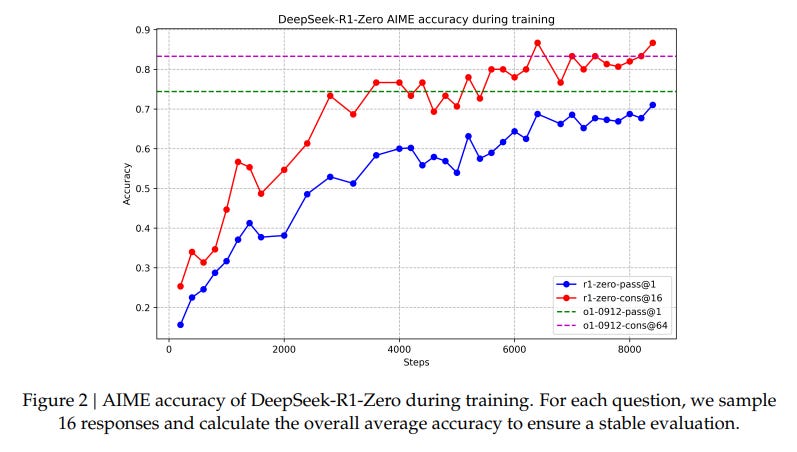

Reinforcement Learning: Instead of relying solely on human-verified right-or-wrong answers, DeepSeek R1 explores possible solutions and is "rewarded" by automated checks, such as whether a math answer is correct, so that its accuracy improves over time.

Model Distillation: After training a massive 671-billion-parameter model, the team compresses (or "distills") its knowledge into smaller versions, with as few as 1.5 billion parameters, making them faster and cheaper to deploy.
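To make the reinforcement-learning step more concrete: the R1 paper describes rule-based rewards rather than a learned reward model, combining an accuracy reward (is the final answer correct?) with a format reward (is the reasoning enclosed in `<think>` tags?). The Python sketch below is loosely modeled on that idea. The template regex, the exact-string answer comparison, the function names, and the equal weighting of the two rewards are assumptions for illustration, not DeepSeek’s implementation.

```python
import re

# Assumed template: reasoning inside <think>...</think>, final answer inside <answer>...</answer>.
THINK_ANSWER = re.compile(
    r"^\s*<think>(.+?)</think>\s*<answer>(.+?)</answer>\s*$", re.DOTALL
)


def format_reward(completion: str) -> float:
    """1.0 if the completion follows the think/answer template, else 0.0."""
    return 1.0 if THINK_ANSWER.match(completion) else 0.0


def accuracy_reward(completion: str, reference: str) -> float:
    """1.0 if the extracted final answer equals the reference after stripping whitespace."""
    match = THINK_ANSWER.match(completion)
    if match is None:
        return 0.0  # no parsable answer, so nothing to grade
    return 1.0 if match.group(2).strip() == reference.strip() else 0.0


def total_reward(completion: str, reference: str) -> float:
    # Equal weighting of the two signals is an illustrative assumption.
    return accuracy_reward(completion, reference) + format_reward(completion)


if __name__ == "__main__":
    good = "<think>Two plus two is four.</think><answer>4</answer>"
    wrong = "<think>Guessing.</think><answer>5</answer>"
    unformatted = "The answer is 4."
    for completion in (good, wrong, unformatted):
        print(total_reward(completion, "4"))
```

A completion with a well-formed reasoning trace and the right answer scores 2.0, a well-formed but wrong one scores 1.0, and free-form text scores 0.0. A more robust grader would normalize answers (for example, treating `4` and `4.0` as equal) before comparing them.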

In short, DeepSeek R1 was built to push large language models (LLMs) toward higher accuracy, adaptability, and efficiency. The team’s overarching vision is to democratize access to powerful language models by open-sourcing the model and much of its training recipe, and by lowering the hardware and data barriers that have historically limited such research to well-funded organizations. This commitment to openness and efficiency is where DeepSeek’s greatest strength lies.

Source: DeepSeek research paper

While the official paper doesn’t include budget details, several of the team’s strategies suggest how they achieved scale at a lower cost:

DeepSeek R1 follows what could be called a "distillation-ready design": the team creates smaller versions of the main model, known as "student" models, that learn from the larger "teacher" model. These smaller models are easier to run because they need far less computing power. This lowers costs and makes the technology usable in more settings, allowing more people and businesses to take advantage of it.
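A rough back-of-envelope calculation shows why smaller students are cheaper to run. The sketch counts only the memory needed to hold model weights. The precisions (FP8, one byte per parameter, for the full model; BF16, two bytes per parameter, for a 7-billion-parameter student) are assumptions, and the estimate ignores activations, the KV cache, and the fact that the full model is a mixture-of-experts that activates only part of its parameters for each token.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    # Assumed precisions: FP8 (1 byte) for the full model, BF16 (2 bytes) for the student.
    full = weight_memory_gb(671e9, 1)
    student = weight_memory_gb(7e9, 2)
    print(f"671B model @ FP8:  {full:.0f} GB")
    print(f"7B student @ BF16: {student:.0f} GB")
    print(f"ratio: ~{full / student:.0f}x")
```

Under these assumptions the full model needs roughly 671 GB just for its weights, far more than a single GPU’s memory, while the student needs about 14 GB and could fit on a single 24 GB GPU.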

Another key method is what the paper calls pure reinforcement learning. Instead of depending heavily on large amounts of data that humans have to label and organize, R1’s precursor, DeepSeek-R1-Zero, was trained with reinforcement learning alone and learned to improve on its own; R1 itself adds only a small set of curated "cold-start" examples before its reinforcement learning stages. Spending less on gathering and preparing data makes the overall development process cheaper and more efficient.
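The reinforcement-learning algorithm named in the R1 paper is Group Relative Policy Optimization (GRPO). Its key idea is that, instead of training a separate value model to judge answers, the model samples a group of answers for each question and scores each one relative to the rest of the group: the advantage is the answer’s reward minus the group mean, divided by the group standard deviation. The sketch below computes only that advantage step. The clipped policy update and KL penalty of full GRPO are omitted, and using the population standard deviation plus a small `eps` is an assumption.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards: list[float], eps: float = 1e-8) -> list[float]:
    """Score each sampled answer relative to the others drawn for the same prompt.

    Answers above the group average get a positive advantage and are reinforced;
    answers below it get a negative one. No learned value model (critic) is needed.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group's rewards
    return [(r - mu) / (sigma + eps) for r in rewards]


if __name__ == "__main__":
    # Four hypothetical completions for one math prompt: two correct (1.0), two wrong (0.0).
    print(group_relative_advantages([1.0, 0.0, 1.0, 0.0]))
```

Correct answers in a mixed group get an advantage close to +1 and wrong ones close to -1, so the policy is pushed toward the former. If every answer in a group earns the same reward, all advantages are zero and that group contributes no learning signal.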

Additionally, R1 benefits from algorithmic advances inherited from its base model, DeepSeek-V3, such as multi-token prediction, alongside model distillation, the technique that transfers knowledge from a larger model to a smaller one. These innovations streamline both training and inference, helping R1 maintain high performance while keeping costs down.
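Multi-token prediction (MTP), as described in the DeepSeek-V3 technical report, trains the model to predict several upcoming tokens at each position rather than only the next one, which densifies the training signal. DeepSeek-V3 implements this with extra sequential MTP modules; the sketch below shows only how the training targets line up for a given prediction depth. The function name and the `depth` parameter are illustrative, not taken from DeepSeek’s code.

```python
def multi_token_targets(tokens: list[int], depth: int) -> list[list[int]]:
    """For each position i, return the next `depth` tokens the model is trained to predict.

    Ordinary next-token prediction is the special case depth=1. Positions too close to
    the end of the sequence to have `depth` future tokens are dropped.
    """
    if depth < 1:
        raise ValueError("depth must be at least 1")
    return [tokens[i + 1 : i + 1 + depth] for i in range(len(tokens) - depth)]


if __name__ == "__main__":
    seq = [10, 11, 12, 13, 14]
    print(multi_token_targets(seq, 1))  # [[11], [12], [13], [14]]
    print(multi_token_targets(seq, 2))  # [[11, 12], [12, 13], [13, 14]]
```

With `depth=2`, every position is supervised on two future tokens instead of one, so the same sequence yields more training signal per forward pass.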

Source: DeepSeek research paper

OpenAI’s latest advanced offerings, such as the o1 model and the new Operator agent, reportedly involve substantial R&D budgets and ongoing operational costs. The capital raised for continuous improvement can run into billions of dollars, partly due to:

Vast data scraping and storage requirements

Extensive human feedback loops (labeling, evaluations)

Proprietary hardware or partnerships

In contrast, DeepSeek R1’s approach demonstrates how a focused research effort and more cost-effective training methods can achieve strong performance at a significantly lower price. If these claims are validated through further peer review, it could signal a shift toward making advanced AI more affordable and widely accessible, potentially challenging the business models of major Western companies.

DeepSeek’s disruption is already rippling through the industry: companies like NVIDIA have seen sharp stock price drops that may be linked to the growing appeal of DeepSeek’s approach. While this may simply be a market reaction, it highlights DeepSeek’s impact on the industry and suggests that established players may need to adapt to remain competitive.

Despite US government efforts to restrict Chinese AI development, such as export controls on advanced AI chips, companies like DeepSeek have found ways to innovate efficiently. This supports the argument that attempts to stifle strong competitors can push them toward more efficient or unconventional ways to innovate and grow. In the long run, such strategies can be counterproductive, potentially harming consumers in the domestic market, who end up paying for products that lack the same level of cost-efficiency.

Source: CNBC

While some skeptics wonder whether DeepSeek R1 is "just GPT by another name," industry observers such as Perplexity AI co-founder Aravind Srinivas argue otherwise. In a recent CNBC interview, Srinivas said DeepSeek R1 is “more than a simple copy,” emphasizing that China is also innovating and “sharing science.” He added that Perplexity is already using DeepSeek to see what it can learn and apply to its own product offerings.

DeepSeek R1’s launch highlights the fact that AI innovation is now a global effort. It showcases both technical novelty and cost-effectiveness, offering a compelling alternative or complement to OpenAI’s models.

While it’s not a simple copy, it also isn’t an entirely isolated effort. The project builds on shared AI research and pushes the limits of what new entrants can achieve with collaborative science and technology. Ultimately, this competition benefits users and researchers worldwide, accelerating AI capabilities for everyone.

References

High-Flyer. (n.d.). Wikipedia. Retrieved January 27, 2025, from https://en.wikipedia.org/wiki/High-Flyer

DeepSeek-AI. (2025). DeepSeek-R1: Incentivizing reasoning capability in LLMs via reinforcement learning. arXiv. Retrieved from https://arxiv.org/abs/2501.12948

Srinivas, A. (2025, January 26). Interview in "How China’s new AI model DeepSeek is threatening U.S. dominance." CNBC.

DeepSeek AI. (2025). DeepSeek R1 GitHub Repository. Retrieved January 27, 2025, from https://github.com/deepseek-ai/DeepSeek-R1

Reuters. (2025, January 27). DeepSeek sparks AI stock selloff; Nvidia posts record market-cap loss. Retrieved from https://www.reuters.com/technology/chinas-deepseek-sets-off-ai-market-rout-2025-01-27/
